Teleportation Through Virtual Environments

“Teleportation” is an NSF-funded project investigating the cognitive processes involved in navigating virtual environments. My role in this project was to design a completely online VR user study to collect teleportation data during the COVID-19 pandemic.

Problem Statement

Before the COVID-19 pandemic, the vast majority of VR research was conducted in controlled lab environments. To maintain health and safety, our team decided to design a study that could be conducted completely online using the participants’ own VR hardware. My job was to design an online study capable of replicating the results of a similar study previously conducted in a lab environment.

Research Questions

The main goal of this experiment was to see whether an unmoderated online study could replicate the results of a fully controlled lab experiment. To do this, we needed to learn how to conduct a successful VR study online, despite very little previous research on the subject. Lastly, I was personally interested in learning how we could use adaptive feedback in the virtual environment to help the participant complete all the research tasks successfully.

- How can a lab-based VR experiment be adapted into an unmoderated, online study?

- Can the online study replicate the results from a lab study?

- How can we replicate “in the moment” feedback, normally provided by a research moderator?

Online Study Considerations

I used existing literature on unmoderated online research from the HCI and UX domains to develop the procedure and application for this online study. Based on this literature, we decided to recruit participants through Amazon Mechanical Turk (MTurk) and Prolific. We also decided that the VR application should automatically upload all data to a data server to prevent data loss. However, designing an online study for VR brings some additional challenges: the application must be device-agnostic, the software must be easy to install and launch, and the instructions and controls must be easy to learn.

One notable difference between an in-person lab study and an online VR study is that in the lab, the researcher can teach the participant how to use the controls to perform the task. This allows the researcher to adapt the instruction to the needs of the participant and repeat it until the participant understands. The researcher can also intervene when the participant is performing the tasks incorrectly. To replicate this process in VR, I designed adaptive instructional feedback that was automatically displayed when the participant performed one of 5 common incorrect actions (as determined by pilot testing).
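
As a rough illustration of the idea, the sketch below maps a detected incorrect action to an instruction and counts how often each one is shown. It is not the study's implementation: the class name, the error codes, and the messages are all hypothetical, since the five actions are not listed here.

```python
from collections import Counter


class FeedbackTracker:
    """Minimal sketch of rule-based instructional feedback (hypothetical design)."""

    def __init__(self, messages):
        self.messages = messages  # error code -> instruction text (placeholders)
        self.counts = Counter()   # how many times each feedback type was shown

    def on_action(self, error_code):
        """Return the instruction to display, or None if no rule matches."""
        if error_code not in self.messages:
            return None
        self.counts[error_code] += 1
        return self.messages[error_code]


# Placeholder codes and wording, invented for this example only.
tracker = FeedbackTracker({
    "example_error_a": "Placeholder instruction for the first kind of mistake.",
    "example_error_b": "Placeholder instruction for the second kind of mistake.",
})
```

Keeping per-type counts alongside the messages means the type and quantity of feedback each participant received is available for later analysis.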

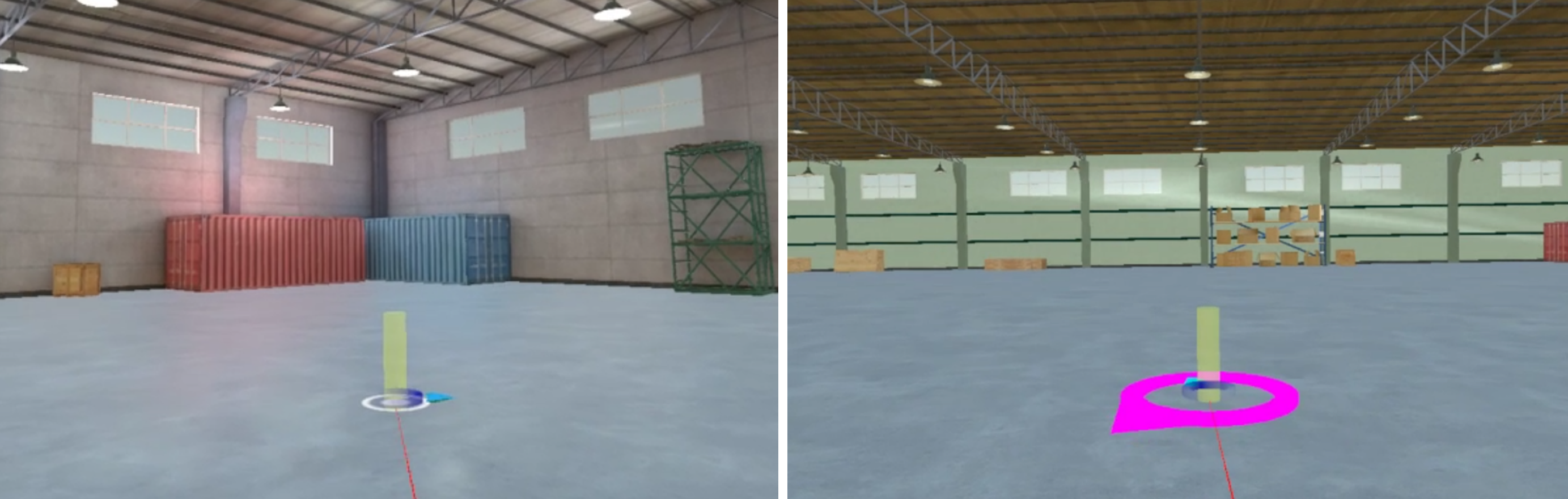

Demonstration of triangle completion task

Task & Variables

The task set by the lab study was a triangle completion task, which tested the participant’s ability to remember where they had been inside a virtual environment. First, the participant was asked to teleport to three locations in the shape of a triangle. Then they were asked to point back towards the location they started from. The amount of error in their response was then measured. The study had three independent variables, each with two levels: teleportation method, virtual room size, and path length. The experiment had a full factorial, within-subjects design (2 × 2 × 2 = 8 treatments) with 6 trials of each treatment, for 48 trials per participant.
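
The design and the error measure described above can be sketched as follows. This is an illustration under assumptions, not the study's code: the level names are placeholders (only the factors are named in the text), shuffling the trial order is assumed, and the angular error is computed on a flat 2D ground plane.

```python
import itertools
import math
import random

# Placeholder level names: the text gives the three factors but not their levels.
FACTORS = {
    "teleport_method": ["method_a", "method_b"],
    "room_size": ["small", "large"],
    "path_length": ["short", "long"],
}
TRIALS_PER_TREATMENT = 6


def build_schedule(seed=0):
    """Return a shuffled trial list covering every factor combination."""
    names = list(FACTORS)
    treatments = [dict(zip(names, combo))
                  for combo in itertools.product(*FACTORS.values())]
    trials = [dict(t) for t in treatments for _ in range(TRIALS_PER_TREATMENT)]
    random.Random(seed).shuffle(trials)  # randomized order is an assumption
    return trials


def angular_error_deg(position, origin, pointed_heading_deg):
    """Absolute angle between the pointed direction and the true direction home.

    position, origin: (x, y) ground-plane coordinates.
    pointed_heading_deg: heading of the response, measured like atan2.
    """
    true_heading = math.degrees(
        math.atan2(origin[1] - position[1], origin[0] - position[0]))
    # Wrap the difference into [-180, 180) before taking the magnitude.
    diff = (pointed_heading_deg - true_heading + 180.0) % 360.0 - 180.0
    return abs(diff)
```

Wrapping the difference before taking the absolute value keeps a response of -170° against a true heading of 180° from being scored as a 350° error.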

Data Analysis (In Progress)

Independent-samples t-tests will be conducted to determine whether the online study was able to replicate the results of the lab study. Specifically, the tests will compare error magnitude and angular error to see whether differences exist between the lab and online groups. Additionally, an analysis of variance will be conducted on the online group to see whether the effects found in the original lab study are replicated. The results of the original lab study can be found in the previously published paper entitled “Teleporting through virtual environments: Effects of path scale and environment scale on spatial updating”.
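
For reference, the statistic behind an independent-samples t-test can be sketched in a few lines. This version is the classic pooled-variance (Student's) form, which assumes equal variances in the two groups; whether the actual analysis uses this form or Welch's unequal-variance variant is not stated here. The function name is hypothetical, and a statistics package would be needed to turn the result into a p-value.

```python
import math
from statistics import mean, variance


def pooled_t(group_a, group_b):
    """Return (t statistic, degrees of freedom) for two independent samples."""
    na, nb = len(group_a), len(group_b)
    df = na + nb - 2
    # Pooled variance: sample variances weighted by their degrees of freedom.
    pooled_var = ((na - 1) * variance(group_a)
                  + (nb - 1) * variance(group_b)) / df
    standard_error = math.sqrt(pooled_var * (1 / na + 1 / nb))
    return (mean(group_a) - mean(group_b)) / standard_error, df
```

In this setting, `group_a` and `group_b` would hold one error score per participant from the lab and online groups respectively.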

To test the effectiveness of the adaptive instructional feedback, regression analysis will be conducted with error magnitude and completion time as dependent variables, and feedback types and quantity as predictors.

Lessons Learned

During the study, we faced several challenges associated with online data collection. One was that participants often falsified their head-mounted display (HMD) ownership on MTurk. We found that it was much easier to find genuine VR HMD owners on Prolific. Another challenge was linking data from web-based Qualtrics surveys to the VR data. To solve this problem, we assigned each participant a unique ID that they entered both into the surveys and when launching the VR application. We also learned that, when conducting research online, it is very difficult to know whether the study was completed as designed. For example, the participant could experience technical difficulties that render their data unusable. To mitigate this, we added survey questions where participants could report any problems with the hardware or software, which made the data easier to filter.
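
A minimal sketch of the linking and filtering step is shown below. The field names (`participant_id`, `reported_problem`) and the ID normalization are assumptions made for illustration, not the actual survey or log format.

```python
def link_records(survey_rows, vr_rows):
    """Join survey and VR records on participant ID.

    Returns (linked, unmatched): linked holds merged records for participants
    who reported no problem; unmatched holds VR IDs with no survey entry.
    """
    # Normalize hand-typed IDs so stray spaces or letter case do not break the join.
    surveys = {row["participant_id"].strip().upper(): row for row in survey_rows}
    linked, unmatched = [], []
    for vr in vr_rows:
        pid = vr["participant_id"].strip().upper()
        survey = surveys.get(pid)
        if survey is None:
            unmatched.append(pid)
        elif not survey["reported_problem"]:
            linked.append({**survey, **vr, "participant_id": pid})
    return linked, unmatched
```

Returning the unmatched IDs separately makes it possible to check by hand whether a mismatch comes from a typo or from a participant who skipped the survey.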

Future Work

Preliminary data analysis has shown that the online study adequately replicated the results of the lab version. Therefore, we are currently developing a new online experiment to test the effects of performance feedback on the triangle completion task in VR.